What is Pydantic? Validating Data in Python

Poor-quality data is everywhere. It can come from end-user inputs, internal or third-party data stores, or external API callers. Even worse? Poor-quality data is expensive. Gartner estimates that poor data quality costs organizations an average of $12.9 million every year.

The best way to prevent bad data is to stop it at the gates - i.e., to ensure data quality at the point of ingestion. But writing good data validation code is hard and often tedious. Plus, without a defined validation framework, it’s hard to standardize and reuse that code.

That’s why, here at Prefect, we’re huge fans of Pydantic. In this article, I’ll talk about what Pydantic is, how to use it in your code, and how to couple it with Prefect to reduce application errors and improve data quality in your most critical workflows.

What is Pydantic?

Pydantic is a data validation package for Python. It lets you define models that you can use (and reuse) to verify that data conforms to the format you expect before you store or process it.

Pydantic supports several methods for validation. At its base, the package uses Python type hints to ensure data conforms to a specific type - such as an integer, string, or date. Take this example from the Pydantic documentation:

```python
from datetime import datetime

from pydantic import BaseModel, PositiveInt


class User(BaseModel):
    id: int
    name: str = 'John Doe'
    signup_ts: datetime | None
    tastes: dict[str, PositiveInt]
```

This code uses Pydantic’s BaseModel class to create a subclass - a model that represents your application’s data. In this case, it specifies that a User consists of the following data:

- The field id must be an integer (and is required).

- The field name must be a string. If you don’t supply a value, it’ll use the good ol’ standby John Doe as a default.

- The field signup_ts must be a date/time, but it can also be null (None in Python). The field is still required, though: you have to pass it, even if the value you pass is None.

- The field tastes is a dictionary whose keys are strings and whose values are positive integers.

If your data fails to conform to these types, Pydantic raises a ValidationError exception. You can catch this exception in your code and decide how to handle the request (e.g., by returning an error message to the caller).

Besides validating data, Pydantic by default also attempts to convert it. For example, if you type a field as an int but supply a string, Pydantic will attempt to coerce the string value into an integer. It’ll only fail if the string can’t be parsed as an integer - like a name or freeform text.

(Not what you want? No worries - you can change this behavior by enabling strict mode in Pydantic.)

Pydantic also supports a number of additional features, including:

- Serializing models to, and deserializing them from, Python dictionaries and JSON

- Validating against Enums that represent a fixed set of choices, improving both type safety and code readability

- Validating data against both built-in and custom types

- Adding custom validators to models, to individual fields within models, and even to custom types
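Here’s a compact sketch that touches three of these features at once: an Enum-typed field, a custom field validator, and a JSON round trip. The Plan and Account models and the lowercase-username rule are hypothetical, made up purely for illustration.

```python
from enum import Enum

from pydantic import BaseModel, ValidationError, field_validator


class Plan(str, Enum):
    # An Enum restricts the field to a fixed set of choices
    free = "free"
    pro = "pro"


class Account(BaseModel):
    username: str
    plan: Plan

    @field_validator("username")
    @classmethod
    def username_is_lowercase(cls, value: str) -> str:
        # Hypothetical custom rule: usernames may not contain uppercase letters
        if value != value.lower():
            raise ValueError("username must be lowercase")
        return value


# Deserialize: JSON text -> validated model instance
acct = Account.model_validate_json('{"username": "jdoe", "plan": "pro"}')

# Serialize: model instance -> Python dict, and -> JSON text
as_dict = acct.model_dump()
as_json = acct.model_dump_json()

# The custom validator rejects a username with uppercase letters
rejected_msg = ""
try:
    Account(username="JDoe", plan="free")
except ValidationError as e:
    rejected_msg = e.errors()[0]["msg"]
print(as_json, rejected_msg)
```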

Use cases for Pydantic

So what do you do with Pydantic? The good news is that it’s a versatile tool that you can use no matter your engineering specialty. Here are a few top use cases - and how we take advantage of these capabilities in Prefect:

Input validation. Application and platform engineers can use Pydantic to ensure that inputs are of the type and format their application expects. For example, you can verify that a user entered their email address in a valid RFC 5322/6854 format.

You can also use input validation at the API level to ensure clients are sending the correct inputs. An example might be ensuring a unique ID from an external system is in proper UUID format.

(Note that Pydantic supports both email addresses and UUIDs as built-in field types, so you can add these checks to your app with just a few lines of code.)

Validating and cleaning data before import. Enforcing data quality in data pipelines is an effective method for improving your system’s overall data quality. Unfortunately, not every data pipeline tool provides an easy way to enforce type and formatting constraints.

By using Pydantic in their data pipelines, data and analytics engineers can validate that a source meets data quality guidelines before importing its data into a warehouse. If your validation code detects an error, you can raise an event, stop the pipeline, and work with your upstream providers to fix the issues at the source.
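Here’s a minimal sketch of that gate, assuming a hypothetical SaleRecord schema and rows that arrive as dictionaries (e.g., parsed from a CSV export). It validates every row, keeps the good ones, and collects the failures so you can decide whether to halt the load.

```python
from datetime import date
from typing import Any

from pydantic import BaseModel, ValidationError


class SaleRecord(BaseModel):
    # Hypothetical schema for one row from an upstream source
    order_id: int
    sku: str
    quantity: int
    sold_on: date


def validate_rows(
    rows: list[dict[str, Any]],
) -> tuple[list[SaleRecord], list[tuple[int, list[dict[str, Any]]]]]:
    """Split raw rows into validated records and (row index, errors) pairs."""
    valid: list[SaleRecord] = []
    rejected: list[tuple[int, list[dict[str, Any]]]] = []
    for index, row in enumerate(rows):
        try:
            valid.append(SaleRecord.model_validate(row))
        except ValidationError as e:
            rejected.append((index, e.errors(include_url=False)))
    return valid, rejected


rows = [
    {"order_id": 1, "sku": "A-100", "quantity": "3", "sold_on": "2024-05-01"},
    {"order_id": "two", "sku": "A-101", "quantity": 1, "sold_on": "2024-05-02"},
]
good, bad = validate_rows(rows)
# In a real pipeline, a non-empty `bad` list is where you'd stop the load
# and alert the upstream provider instead of writing to the warehouse.
print(f"{len(good)} valid row(s), {len(bad)} rejected")
```

Recording the row index alongside Pydantic’s error details makes it easier to point the upstream provider at exactly which records need fixing.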

Settings management. Errors in settings files - e.g., malformed access keys, bad environment values - can cause an application to grind to a halt. For example, imagine someone fat-fingers the word “prod” in an environment variable as “prdo” - and all of your database connection strings that use the environment name as a key fail as a result.

Pydantic’s BaseSettings class (from the pydantic-settings package) makes it easy to load settings from environment variables or secrets files and validate the values according to your specifications. Take the previous example. Instead of typing the environment name as a plain string, you could make it a Python Enum, ensuring that the setting is one of a handful of static choices:

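```python
# BaseSettings lives in a separate package: pip install pydantic-settings
from enum import Enum

from pydantic_settings import BaseSettings


class Env(str, Enum):
    dev = 'dev'
    test = 'test'
    stage = 'stage'
    prod = 'prod'


class Settings(BaseSettings):
    # Loaded from the ENV environment variable (name matching is case-insensitive
    # by default). A typo such as ENV=prdo makes Settings() raise a
    # ValidationError instead of silently accepting the bad value.
    env: Env
```

How Prefect leverages Pydantic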

Like I said, we love Pydantic. That’s why we use it everywhere!

Prefect takes advantage of several of Pydantic’s capabilities to provide a high-quality workflow orchestration tool to our customers:

- Our own internal input validation and type checking. We also make Pydantic a part of our runtime environment for workflows, so that you can easily add your own input validation to any of your background tasks.

- All of our blocks - pre-built configuration formats for storing secrets and interacting with external systems - are built as Pydantic models.

- Our interactive workflows feature, which enables building workflows that accept typed input at runtime, accepts Pydantic models.

- Our API utilizes Pydantic and FastAPI to ensure proper type-checking of all input from API callers and our own CLI.

- We store task results in blocks using a JSON serializer that supports all Pydantic types.

Pydantic in action

Let’s step through an example of Pydantic in action. Say you’re building a backend workflow that validates a user’s information when they open a new account.

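First, install Pydantic with pip. Because this example uses Pydantic’s EmailStr type, include the optional email extra, which pulls in the email-validator package: pip install "pydantic[email]"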

Next, you’ll create your base model representing your customer. To keep things simple, assume we only need three fields:

- Full name

- Email address

- The user’s IP address

To model this, you’ll create a subclass of Pydantic’s BaseModel class containing the fields you need and their corresponding types:

```python
from pydantic import BaseModel, EmailStr, IPvAnyAddress, ValidationError


class Customer(BaseModel):
    fullName: str = "John Doe"
    email: EmailStr
    ipAddress: IPvAnyAddress
```

That’s it! You’ve created your first model. To try it out, create a dictionary of values you can pass to the Customer constructor:

1data = {

2 "fullName": "Jane Doe",

3 "email": "jane_doe@foo",

4 "ipAddress": "1812.244.0.1"

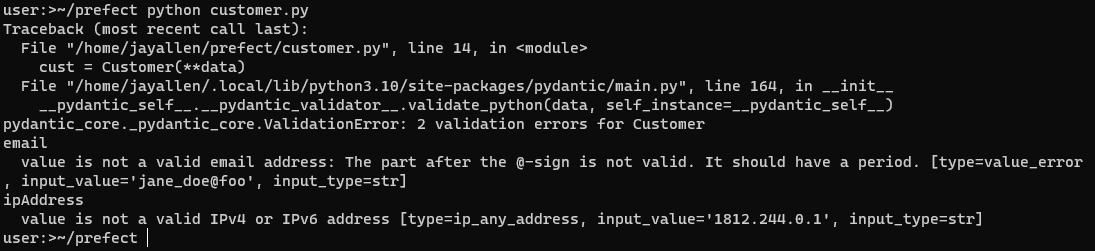

5}You’ll notice I’ve deliberately muddied these values. The email address doesn’t have a valid domain and 1812 in the IP address is most certainly not an 8-bit integer.

Let’s see what happens when you try to instantiate this class with these values: cust = Customer(**data)

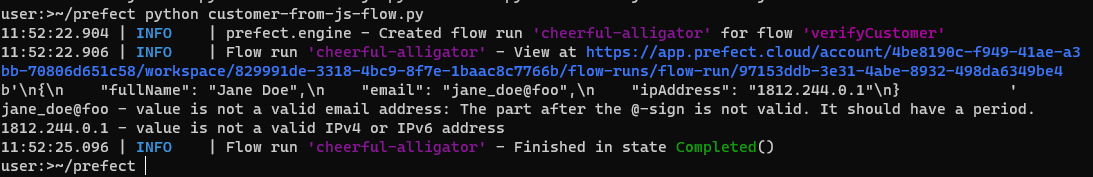

If you run this on the command line, here’s what you should see:

Pydantic clearly and helpfully tells you which values didn’t conform to your type specifications. Better yet, you accomplished this with barely writing any code. Pydantic’s built-in types did most of the heavy lifting for you.

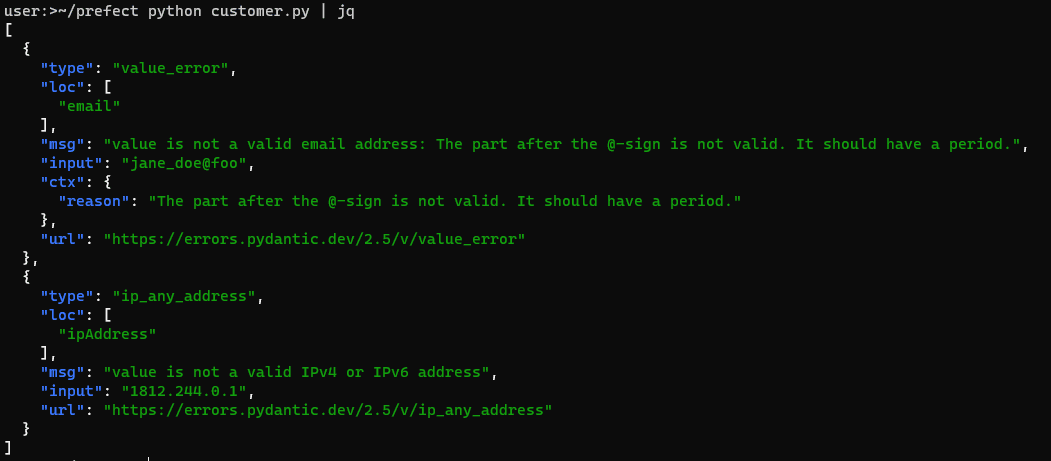

Need to format the results better for logging or user feedback? Easy enough - just wrap the creation of the Customer object in a Python try/except block:

```python
try:
    cust = Customer(**data)
except ValidationError as e:
    print(e.json())
```

If any validation check fails, Pydantic raises a ValidationError that contains a list of errors. The code above converts this list to JSON so you can see how Pydantic formats each error object:

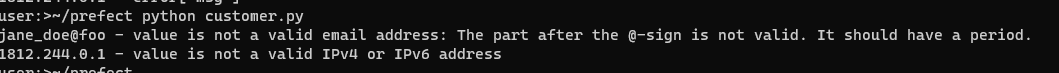

You can also iterate through the list of errors using the errors() method to create your own error output:

```python
try:
    cust = Customer(**data)
except ValidationError as e:
    for error in e.errors():
        print(f'{error["input"]} - {error["msg"]}')
```
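If you’re returning feedback to a user or an API caller, it can help to group messages by field. Here’s a minimal sketch that uses each error’s loc entry (the path to the failing field). To avoid the email-validator dependency, it uses a hypothetical Signup model instead of Customer; errors_by_field() and signup_feedback() are illustrative helper names.

```python
from pydantic import BaseModel, IPvAnyAddress, PositiveInt, ValidationError


class Signup(BaseModel):
    # Hypothetical stand-in for Customer (no EmailStr, so no email-validator needed)
    fullName: str = "John Doe"
    ipAddress: IPvAnyAddress
    age: PositiveInt


def errors_by_field(e: ValidationError) -> dict[str, list[str]]:
    """Group Pydantic's error messages by the dotted path of the field they refer to."""
    grouped: dict[str, list[str]] = {}
    for error in e.errors():
        # loc is a tuple such as ("ipAddress",); model-level errors have an empty loc
        field = ".".join(str(part) for part in error["loc"]) or "(model)"
        grouped.setdefault(field, []).append(error["msg"])
    return grouped


def signup_feedback(data: dict) -> dict[str, list[str]]:
    """Return {} if data is valid, otherwise a field -> messages mapping."""
    try:
        Signup.model_validate(data)
    except ValidationError as e:
        return errors_by_field(e)
    return {}


print(signup_feedback({"ipAddress": "1812.244.0.1", "age": 0}))
```

A mapping like this serializes cleanly into a JSON error response, and a front end can use the keys to highlight the offending form fields.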

Why use Pydantic?

Strictly speaking, do you need Pydantic to perform data validation? Well, no. You can always roll your own validation code. But using Pydantic gives you:

- A Pythonic approach to data validation. It leverages Python’s built-in features and syntax to work seamlessly with the language. If you know Python, using Pydantic is easy.

- Extensibility. With custom validators and custom types, you can extend Pydantic to express whatever complex validation your app requires.

- High performance. In version 2, Pydantic’s core validation logic was rewritten in Rust, making it up to 50x faster than version 1. Pydantic also includes its own fast, built-in JSON parser.

- Self-documentation. Building Pydantic into your apps turns their assumed preconditions into explicit contracts. Defining your validation code in structures such as classes and Enums also makes your code’s intent more obvious and easier to read.

- Stability. Pydantic is actively maintained by a vibrant open-source community and is used by some of the biggest names in tech.

Using Pydantic with Prefect

We use Pydantic for all the reasons above. This is also why we make it available to our users to enhance their own workflows.

Prefect is a workflow orchestration and observability platform that provides a centralized, consistent approach for running a variety of scheduled and event-driven application workloads. With Prefect, you can run your background tasks as Python scripts, serverless functions, or Docker containers, and manage them no matter where they run.

To see how this works, let’s say you want to take your code above and do the following:

- Parse a new customer record from a JSON file (e.g., a file uploaded to an object storage container like Amazon S3)

- Parse, validate, and take action on this customer record (e.g., trigger an e-mail verification workflow) whenever an event or API call occurs

First, you can modify your Pydantic code to load and verify a customer record from JSON. In the code below, I use a static file in Amazon S3 as an example. Once you’ve loaded the JSON from the URL, you can call the class method model_validate_json() on your Pydantic model to create a new Customer object. (Your model inherits this method from BaseModel.)

```python
from urllib.request import urlopen

from pydantic import BaseModel, EmailStr, IPvAnyAddress, ValidationError


class Customer(BaseModel):
    fullName: str = "John Doe"
    email: EmailStr
    ipAddress: IPvAnyAddress


try:
    url = "https://jaypublic.s3.us-west-2.amazonaws.com/customers.json"
    # Download the raw JSON bytes; model_validate_json() parses and validates them
    with urlopen(url) as response:
        cust_data = response.read()
    print(cust_data)
    cust = Customer.model_validate_json(cust_data)
except ValidationError as e:
    # Report each value that failed validation
    for error in e.errors():
        print(f'{error["input"]} - {error["msg"]}')
```

Next, you can turn this into a Prefect workflow and run it in response to an event - such as a file upload to Amazon S3 - or an API call from an external application. To do so, install Prefect on your machine or in your virtualenv: pip install -U prefect

Running this in Prefect entails creating a flow - a function that takes input, performs some work, and returns output. Our Quickstart will give you more information on how to get started with flows. Briefly, here’s how your code would change:

```python
from urllib.request import urlopen

from prefect import flow
from pydantic import BaseModel, EmailStr, IPvAnyAddress, ValidationError


class Customer(BaseModel):
    fullName: str = "John Doe"
    email: EmailStr
    ipAddress: IPvAnyAddress


@flow(log_prints=True)  # log_prints=True sends print() output to the Prefect logs
def verify_customer():
    try:
        url = "https://jaypublic.s3.us-west-2.amazonaws.com/customers.json"
        with urlopen(url) as response:
            cust_data = response.read()
        print(cust_data)
        cust = Customer.model_validate_json(cust_data)

        # Trigger email verification (verifyEmail is a placeholder, not shown)
        # verifyEmail(cust)
    except ValidationError as e:
        for error in e.errors():
            print(f'{error["input"]} - {error["msg"]}')


if __name__ == "__main__":
    verify_customer()
```

You only have to make a few minor changes to turn this into a Prefect flow:

- Create a function - I called ours verify_customer() - and mark it as a flow with Prefect’s @flow decorator. This super-powers your ordinary Python function and gives it a number of additional Prefect-provided features, including retry semantics, persistent state, and logging, among others. You can also turn a flow into a deployment, which provides it with a remotely accessible REST API interface.

- Call the flow from the Python file’s if __name__ == "__main__": block.

You can run this file by logging in to Prefect Cloud (create an account if you don’t have one already) with prefect cloud login, and then running: python customer-from-js-flow.py

This gives us the following output:

There’s no sense in triggering your email confirmation workflow if you weren’t even handed a valid email address! By leveraging Pydantic, you can detect and log errors in your workflows. That reduces mysterious workflow failures and, over time, leads to improved data quality.

From here, you can turn your Prefect flow into a deployment, which will enable you to trigger it via a webhook. Just change the line in the __main__ block to the following: verify_customer.serve(name="verify_customer_deployment"). Running the file then creates the deployment and starts a long-running process that executes its runs.

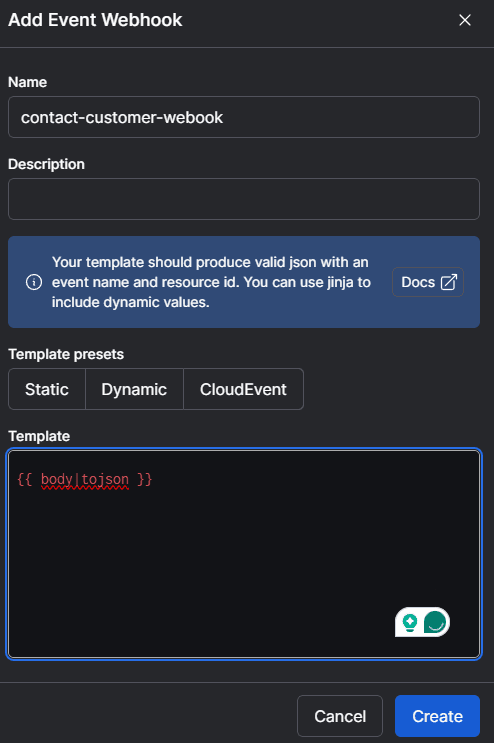

To create a webhook, log in to Prefect Cloud and, from the navigation menu, select Event Webhooks. Select the + button and then add a dynamic webhook that passes through the JSON body you send in your request:

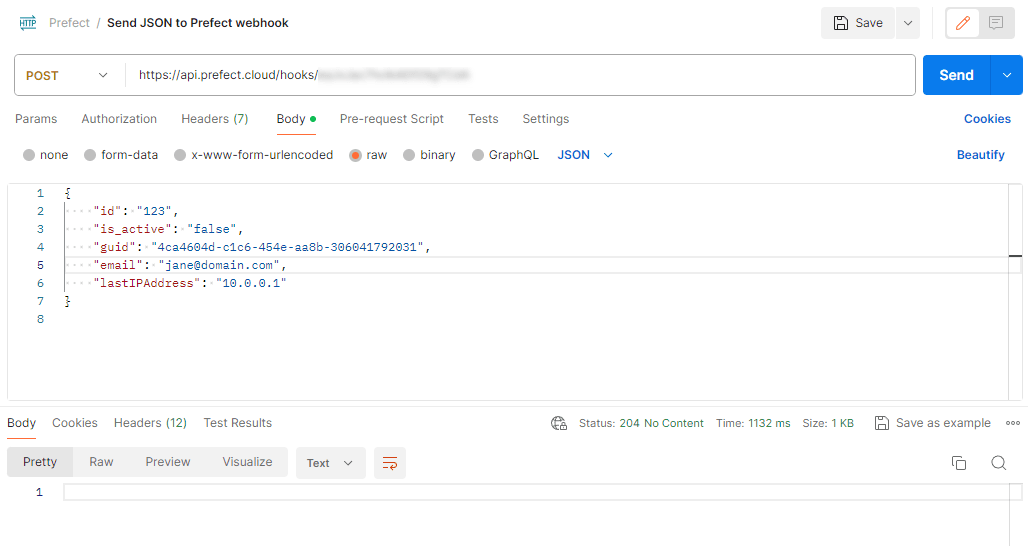

This will create a webhook URL that you can call from any application. For example, you can call it with a simple POST with a JSON body via Postman:

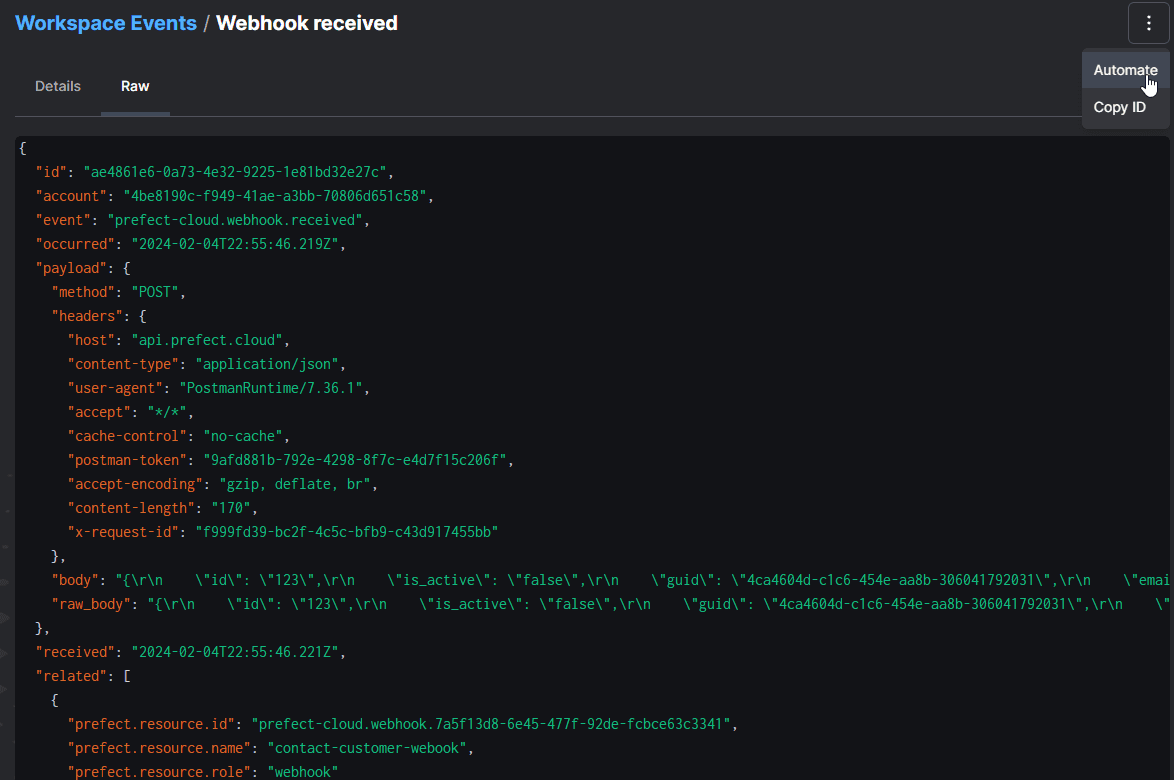
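If you’d rather call the webhook from code than from Postman, here’s a standard-library sketch. The helper names are hypothetical, and you’d pass in the URL Prefect generated for your webhook; the payload can be the same customer dictionary used earlier.

```python
import json
from urllib.request import Request, urlopen


def build_webhook_request(webhook_url: str, payload: dict) -> Request:
    """Build (but don't send) a POST request with a JSON body."""
    return Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_to_webhook(webhook_url: str, payload: dict) -> int:
    """POST the payload to the webhook and return the HTTP status code."""
    with urlopen(build_webhook_request(webhook_url, payload), timeout=10) as response:
        return response.status
```

For example, send_to_webhook(your_webhook_url, data) would POST the deliberately invalid data dictionary from earlier. Note that urlopen() raises an HTTPError if the server responds with an error status, so wrap the call in a try/except if you want to handle that case yourself.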

This creates an event in Prefect for the call. From the navigation menu, select Event Feed and click through to the event to view all of its data. From there, click the three-dot menu in the upper right and select Automate to associate your webhook with your deployment, as we’ve documented elsewhere.

You can also modify the flow for more fine-grained control over its execution. For example, you could split the validation and e-mail confirmation steps into separate tasks, and specify retry semantics for the email verification task on failure (e.g., if your SMTP server is temporarily unreachable).

Final thoughts

I’ve shown how easy it is to incorporate Pydantic into your Python code - and how you can use Pydantic and Prefect together to create robust workflows. To try it out yourself, sign up for a free Prefect account and create your first workflow.
