The Hidden Factory of Long Tail Data Work

A critical data pipeline fails. As you investigate, you discover this pipeline, hastily created months ago for a "one-time" analysis, has been running without proper error handling or data quality checks. What should have been a quick fix now requires a complete refactor and redeployment, potentially delaying key business decisions and eroding trust in the data team.

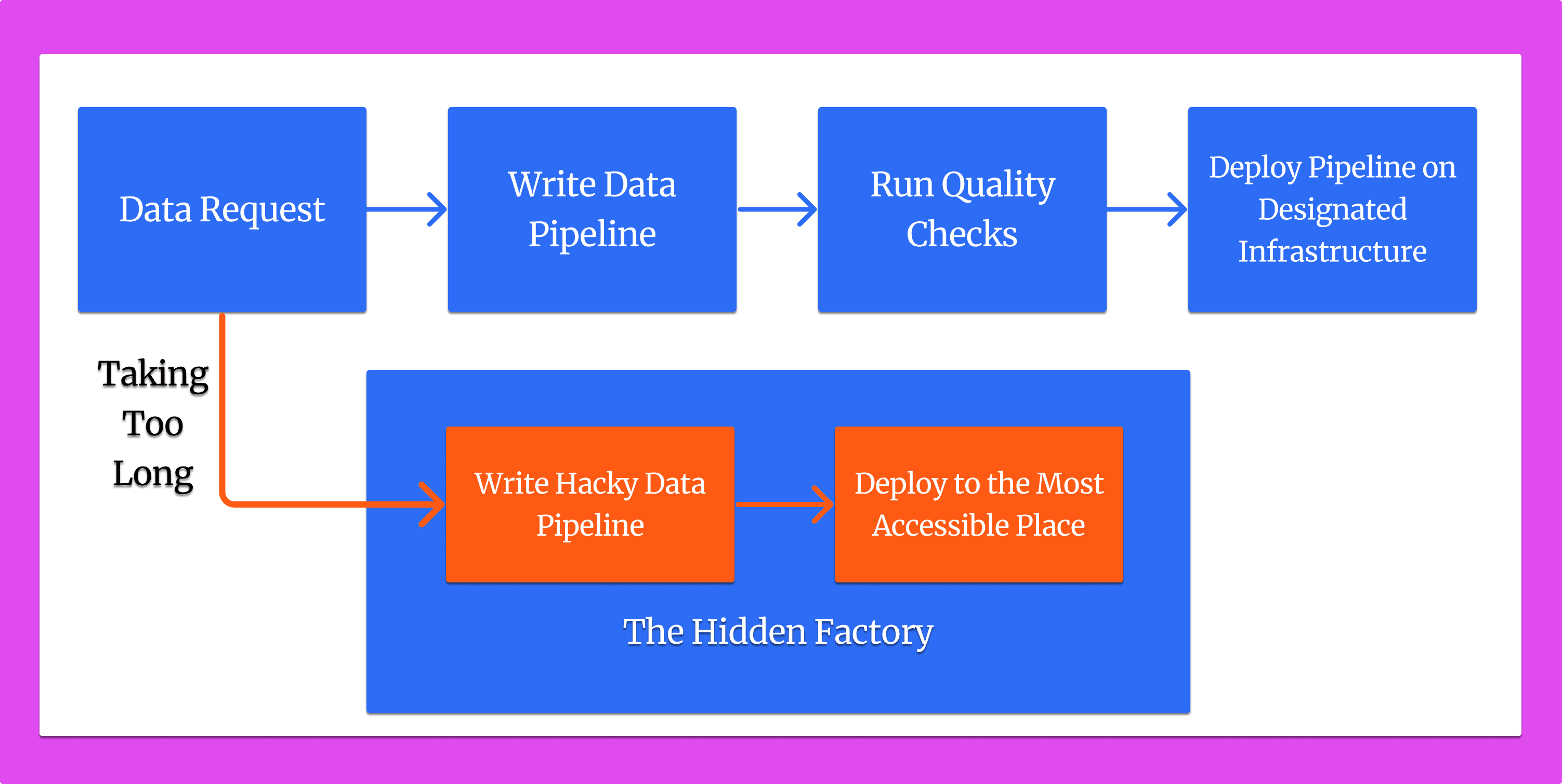

This scenario exemplifies the hidden factory: unseen processes that consume resources without adding value. Hidden factories lead to inefficiencies, wasted resources, and compromised quality.

The term was coined by Armand Feigenbaum, author of “Total Quality Control”, as he observed inefficient workarounds that became institutionalized in a GE factory in the 1960s. The concept has since been broadened. As Dylan Walsh reports: “Nearly any type of work process in any industry can be scrambled by hidden factories.”

Hidden factories are often found in the long tail of data work: diverse, specialized tasks outside standard pipelines. These quick scripts, ad-hoc analyses, and "temporary" solutions can significantly impact team productivity and, ultimately, the outputs the business relies on, such as customer churn predictions and revenue forecasts.

Why Hidden Factories Persist in Data Engineering: The Rigidity Trap

Hidden factories in data engineering don't just appear out of nowhere. They're often the result of rigid workflows and inflexible systems that force data professionals to seek workarounds. Let's break down why these hidden factories persist:

- Inflexible tools and processes: Traditional data tools often lack the flexibility required for long tail work. For instance, a data scientist might need to deploy a machine learning model on serverless infrastructure for cost efficiency and scalability, but the organization's standard processes only support VM-based deployments. This rigidity forces the creation of unofficial, potentially brittle solutions.

- Time pressure: Data engineering teams, often understaffed and overwhelmed, become bottlenecks in the data pipeline. When a product manager needs urgent user behavior data for a critical decision, waiting for the data engineering team to create a proper pipeline isn't an option. The product manager might write a quick, unsanctioned script instead, bypassing established processes.

- Skill gaps: Many professionals can write Python scripts but lack knowledge of deployment paradigms or specialized data tools. A data analyst might create a powerful predictive model locally but struggle to integrate it into the organization's data infrastructure, leading to manual, error-prone processes for model prediction pipelines.

- Governance blind spots: As ad-hoc solutions proliferate, they often operate outside the purview of established data governance frameworks. This can lead to inconsistent data handling practices, potential security vulnerabilities, and compliance risks that may go unnoticed until they cause significant problems.

The Fallout: Consequences and Challenges for Data Engineering Teams

With many hidden factories, data engineering teams find themselves constantly in firefighting mode, dealing with failures in poorly constructed pipelines instead of focusing on strategic initiatives. Each ad-hoc solution becomes a piece of technical debt that the team will eventually have to address. And when pipelines break, they often seem to break at the worst possible time.

Inconsistent processes then produce conflicting results across departments. When the sales team's churn predictions don't match the customer success team's figures, it's the data engineering team that bears the brunt of the blame, even if the discrepancy stems from someone else's unofficial process. This loss of trust can have far-reaching consequences. And besides, who wants to spend a meeting discussing why two numbers don’t match? No one.

Then other teams might get involved: the finance team wonders why redundant efforts are eating up valuable resources, and the risk team needs to dig into security vulnerabilities introduced by unmanaged workflows. The loss of trust can extend far beyond the immediate users of the workflow.

Addressing these issues isn't straightforward. Encouraging standardization without stifling the innovation that often comes from these ad-hoc solutions is a delicate balance. Creating a unified approach that satisfies all stakeholders requires careful planning and compromise.

A Solution: Building a Versatile Data Platform

The key to addressing hidden factories lies in building a versatile data platform that can accommodate the diverse needs of long tail data work. For instance, such a platform would support:

- Flexibility and scalability: Create a foundation adaptable to many use cases, from simple data pulls to complex machine learning pipelines. Workflow authors can then write the workflows they need, whether for small or large volumes of data.

- Self-service with built-in security: Enable teams to write and run workflows independently, but within a governed framework so infrastructure resources are not wasted or left unmanaged. This could mean building on a workflow framework designed by a data platform engineering team. Ideally, this framework would have guardrails so that workflow authors don’t waste time setting up infrastructure, work insecurely, or accidentally overspend on resources.

- Monitoring and logging: Ensure logs are stored for all workflow tasks and are easily visible to relevant stakeholders. Implement alerting, retries, and automated recovery of workflows to limit downtime.
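To make the monitoring and retry point concrete, here is a minimal sketch using only the Python standard library. It is not Prefect's API or any particular platform's implementation; orchestration tools typically offer this behavior as configuration. The names `run_with_retries`, `alert`, and `flaky_extract` are hypothetical and exist only for illustration.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s: %(message)s",
)
logger = logging.getLogger("workflow")


def run_with_retries(
    task: Callable[[], T],
    *,
    name: str,
    max_attempts: int = 3,
    delay_seconds: float = 1.0,
    alert: Callable[[str], None] = logger.critical,
) -> T:
    """Run `task`, logging every attempt and retrying on failure.

    `alert` is a hypothetical hook: by default it logs at CRITICAL level,
    but it could post to a chat channel or paging system instead.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")

    for attempt in range(1, max_attempts + 1):
        try:
            result = task()
        except Exception as exc:
            logger.warning("task=%s attempt=%d failed: %s", name, attempt, exc)
            if attempt == max_attempts:
                # Out of retries: notify a human, then surface the error.
                alert(f"task {name} failed after {max_attempts} attempts")
                raise
            time.sleep(delay_seconds)
        else:
            logger.info("task=%s attempt=%d succeeded", name, attempt)
            return result

    raise AssertionError("unreachable")


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky_extract() -> str:
        # Simulates a source that fails once before responding.
        attempts["count"] += 1
        if attempts["count"] < 2:
            raise ConnectionError("source temporarily unavailable")
        return "rows loaded"

    print(run_with_retries(flaky_extract, name="extract", delay_seconds=0.1))
```

Every attempt leaves a log line, and the alert fires only once retries are exhausted, so a transient failure recovers on its own while a persistent one reaches a person instead of going unnoticed.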

To see what this can look like in practice, consider Cox Automotive's journey. Their Planning Analytics team struggled to manage data pipelines with cron jobs, lacked observability, and grappled with manual retry processes. Self-hosted virtual machines hindered efficient scaling, and the team needed a solution that could cater to both programmers and non-programmers while ensuring high availability and security across nationwide deployments.

The team implemented a solution with Prefect Cloud.

The result was enhanced visibility and automation across hundreds of data pipelines, optimized scalability and resource management, and accelerated deployment processes through a seamless GitHub integration. They left limited infrastructure and ad-hoc code in the rearview mirror and moved to a version-controlled system that scales with their workloads.

By investing in such a platform, organizations can bring their long tail data work into their core data practice. It's not a one-time effort, but an ongoing process of evaluation, adaptation, and improvement. However, with the right approach and tools, data engineering teams can move from constant firefighting in a hidden factory to enabling a truly data-driven mindset within even the largest enterprise organizations.

Curious how Prefect can help you build a versatile data platform? Book a demo here.