Glue Code: How to Implement & Manage at Scale

Ever think, “all I need here is a little bit of code to connect X to Y?”

Who are we kidding? Of course you have. Who hasn’t? However, Y might be a critical process. If that “little bit of code” fails, it could have negative consequences for your business by virtue of being a requirement for critical process Y.

Our systems are full of such “little code” - a.k.a. glue code. In this article, I’ll dive into the nature of glue code, its challenges, and how best to monitor and manage it at scale across your infrastructure to ensure system reliability.

What is glue code?

Glue code connects two or more software systems in different environments or otherwise bridges incompatible processes. It typically runs as a scheduled job on a server or, in today’s cloud-based systems, as a serverless function or Docker container. It’s a tried-and-true technique for stitching together systems in a decoupled manner.

Something usually “smells” like glue code if it has any of these traits:

- It’s used primarily as a routing mechanism - e.g., to send data or information between systems or to automate request routing; and/or

- It unifies remote systems (e.g., other SaaS tools), not under your direct control, that have their own Service Level Agreements (SLAs), issues, etc.

- It relies on remote systems susceptible to transient issues, such as a remote REST API that may error out due to remote server issues or a jittery network connection.

The challenge with glue code

Glue code will typically start as Bash script or Python code running on a virtual machine as a cron job. It’s increasingly common to see this managed using some form of serverless technology - particularly serverless function features, such as AWS Lambda or Google Cloud Functions.

This is fine when you’re talking about one or two functions. However, as your system grows, so does the amount of glue code you’re managing. And that’s when you start to see problems.

1️⃣ No centralized observability. Typically, engineers in your company write glue code in the way that works best for them. That means it can exist in multiple languages spread across multiple technologies - servers/VMs, serverless functions, Docker containers, etc.

Without a single unified platform for observing glue code, you may have code connecting critical processes together that few people know exists. One day, the code triggering Y from X fails - and no one knows where it lives or how it works because its author left the company two months back.

2️⃣ Hard to track changes. Because of its ad hoc nature, a lot of glue code doesn’t sit in a source code repository. Thinking that it “doesn’t fit anywhere,” developers will keep copies on their local machines. That makes it hard (if not impossible) to find the current source of truth and to see who has made what changes.

3️⃣ Hard to monitor and debug. A consequence of the lack of observability is that this code may not be producing the logs and metrics you need to debug it quickly. It might not even be obvious where the process is running. An alert in Slack that the X-to-Y process failed in Slack may trigger a day-long search through the company’s cloud accounts to figure out who implemented that code, where they hosted it, and where it’s spitting out its telemetry.

4️⃣ May not scale. You may have written glue code thinking it’d only run a dozen times a day. But what happens when the business scales and it’s being invoked 100x or more every hour?

Fortunately, technology like serverless functions can scale on demand. But that doesn’t mean the functions themselves are written to scale well. (A function could be using a database with a limited connection pool, for example.)

Again, without observability in place, you may not notice this issue until something fails. You’ll only here about it when a report goes stale, or customers complain about missing order notifications.

How do you manage glue code at scale?

Glue code will always be with us. So the question is: How do we get serious about it? In other words, how do we raise glue code from “just a little bit of code” into a first-class citizen in our application infrastructure?

Prefect exists to answer this question. We built Prefect as a workflow orchestration and observability platform to enable you to structure and observe your workflows consistently across your organization. Powered by Python, Prefect is architecture-agnostic - it can monitor workflows no matter what type of compute you use to power them.

Using Prefect, you can make your glue code:

- Readable

- Observable

- Scalable & reliable

Readable

It’s a truism in our industry that we read code more often than we write it. This is probably one reason so many of us leverage Python for glue code. Truly “Pythonic” code isn’t just efficient, it’s often very readable. That makes it easy for another team member to understand what we did and run with it.

Prefect enhances readability and understandability of glue code by defining a workflow structure. In Prefect, you create your workflows as flows that can be divided further into tasks. You can do this simply without writing any additional code - just add the @flow and @task decorators to your Python functions.

So for example, you could define a workflow that exports data from a remote API as three tasks: fetch the data, clean the data, and then store it in our infrastructure (e.g., in an S3 bucket, Snowflake, etc.).

1import httpx

2from prefect import flow, task

3

4@task(retries=2, retry_delay_seconds=5)

5def get_data_task(

6 url: str = "https://api.brittle-service.com/endpoint"

7) -> dict:

8 response = httpx.get(url)

9 # Do work here

10 return

11

12@task

13def transform_data_task:

14 # Do work here

15 return

16

17@task

18def store_data_task():

19 # Do work here

20 return

21

22@flow

23def get_data_flow():

24 get_data_task()

25 transform_data_task()

26 store_data_task()This structure makes it easy for someone looking at your code for the first time to get what’s going on. It also makes it easier to debug the code when there’s an issue, as you can hone in on the code in the failing task.

Observable

Glue code is deeply coupled with the orchestration technology of your architecture. It connects and bridges together parts of your system so they work together in a harmonious whole (even if no one ever intended them to).

However, if glue code is spread across your architecture, you may not be able to observe it in a holistic manner. For example, an AWS Lambda function may be trailing its output to a CloudWatch log in one of the dozen+ AWS accounts your org operates. Meanwhile, a Bash script running on some virtual machines may be cat’ing console messages to /logs/gluecode/noonebutmeknowsthisexists.log, which its author occasionally logs in to tail to make sure things don’t “look wonky”.

Observability is more than just logs. Observability provides the tools you need to see the state of a complex distributed system at any time and dive deep into any reported or suspected issue. It does this partly by bringing all of your running processes under one roof so that you can track and observe them all-up.

This is a big piece of what Prefect brings to glue code. Using Prefect, you can implement glue code directly in Python, run it, and observe it from a single platform. You can also define Prefect workflows that collect notifications, logs, and metrics from processes running externally - serverless functions, Docker containers, or any other external process.

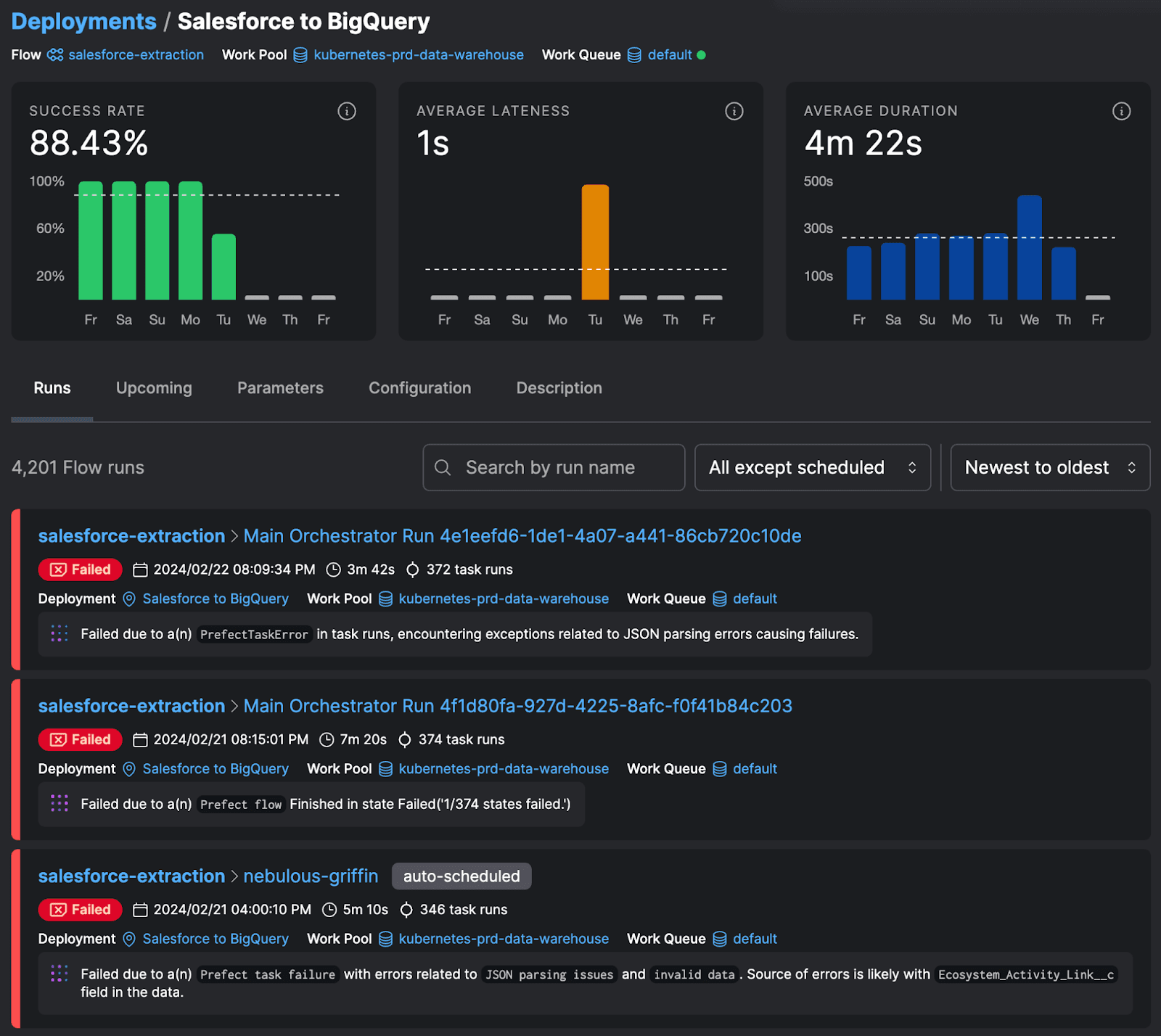

Prefect also provides a rich interface showing the exact state of your flows and tasks. If a flow fails, you can see exactly which task within the flow failed and jump straight into the logs, giving you all the information you need to figure out what went wrong and how to correct it. That reduces mean time to recovery, which minimizes lost time and revenue should a critical business process error out.

Scalable & reliable

Glue code inherently helps with system scalability. By acting as a bridge between systems, it keeps them loosely coupled and reduces hard dependencies.

This is one reason why you may rely on serverless features, such as serverless functions, to implement glue code. Features like AWS Lambda can scale on demand, which reduces the odds they’ll become a choke point in your architecture.

Prefect enhances scalability by enabling you to run on any scalable architecture you need. You can use any cloud technology in conjunction with Prefect, leveraging features such as work pools to define custom units of computing capacity. For example, you can leverage existing resources such as a cluster of virtual machines or a Kubernetes instances.

If you’re tired of managing compute in any form, Prefect can help with that, too. You can use managed execution pools to tell Prefect to spin up compute on your behalf - no cloud account needed.

Furthermore, you can leverage Prefect to increase system reliability. With retries, for example, you can use a Python decorator to rerun failed tasks automatically. The following code shows how to add a retry to a task calling an external API endpoint, specifying that Prefect should retry the task up to two times with a delay of five seconds between calls.

1import httpx

2from prefect import flow, task

3

4@task(retries=2, retry_delay_seconds=5)

5def get_data_task(

6 url: str = "https://api.brittle-service.com/endpoint"

7) -> dict:

8 response = httpx.get(url)

9

10 # If the response status code is anything but a 2xx, httpx will raise

11 # an exception. This task doesn't handle the exception, so Prefect will

12 # catch the exception and will consider the task run failed.

13 response.raise_for_status()

14

15 return response.json()Many homegrown glue code solutions aren’t built to withstand failure. By bringing your glue code into Prefect, you can enable developers to add more stability to their code with minimal coding. You can also ensure everyone’s coding their workflows to the same standards.

See how Prefect can bring greater readability, observability, and scalability to your glue code for yourself - sign up for a free account and try out the first tutorial.

Related Content