If You Give a Data Engineer a Job

Inspired by a talk delivered by our CTO, Chris White, in 2021.

If you give a Data Engineer a job

If you give a data engineer a job, they’re going to write a Python script.

When they write the Python script, they’re probably going to want to run it on a schedule.

When the job completes, they’ll want to confirm that it succeeded.

Then they’ll want a dashboard to make sure that the job keeps running successfully.

When they do look at the dashboard, they might notice that a job run failed.

So they’ll probably ask for retries.

When they’re finished adding retries to the job, they’ll want more observability.

They’ll start logging events.

They might get carried away and observe every part of the job.

They may even end up observing the infrastructure that the job runs on and the services it interacts with.

When they’re done, they’ll probably want to share the information with their team.

They’ll have to set up a web application with security and permission controls.

They and their team will sign in, make themselves comfortable and analyze the information.

When they look at the information, they’ll get so excited they’ll want to observe jobs of their own.

One thing leads to another

The lesson of If You Give a Mouse a Cookie is simple: one thing leads to another. But it’s hard to predict what each thing will lead to. Sure, the ideal project starts out with ample resources, sufficient time, and clear requirements, but how often does your project start anywhere close to that ideal?

If you’re like most people, your typical project starts out with a one-off question that needs to be answered ASAP. If you have all the data you need in your warehouse and your question can be expressed in SQL, a query might do the trick. But the moment that you need data from two or more systems, or need a library that SQL can’t give you, you’ll probably write some Python.

You answered the question successfully - your business stakeholders are psyched! So psyched, in fact, that they have more questions. By the way, they also want you to start giving them updated answers to those questions every week. Oh, and if this goes really well, they may even start including analysis like this in your company’s product.

At this point, anything can happen. Maybe everyone will lose interest in this new thing next week and you’ll start the process over again with a new question. Or maybe this new thing will grow into a series of insights that transforms your entire organization. You have no way of knowing.

Perhaps you’re a pessimist. You’ve got a lot of other things to do, and this new thing probably won’t amount to much. So you’ll just run your script on some machine, with cron kicking it off each week. It’ll probably work. And it does - for a while. Until one day, something unexpected happens. Maybe your platform team updates the machine without notifying you. Maybe the web service that you’re pulling from is down. Whatever it is, you get a call one evening from a frustrated business stakeholder, who works for a frustrated executive, who says that the numbers don’t add up and that she can’t trust any of the information your team is giving her. Now you’re frustrated too, because you’re spending your evening fixing the problem. The worst part is that you don’t know what the problem is or when it happened, since the script execution wasn’t observable. Don’t be a pessimist.

Perhaps you’re an optimist. You’re certain that this new thing is going to be the thing that puts your team on the map and gets you the support you need. You spend all week modeling the inputs and the outputs meticulously, adding data quality checks, and designing for every possible failure mode. It’s not just a script, it’s a pipeline. It’s a thing of beauty. All of your pipelines should be this way. If only you had time to make them that way. The next week, the business stakeholder comes back and asks you about the new thing. You tell them the new thing is the best thing since sliced bread. They take a look and say “Oh, I meant the other new thing. You know, the thing before this thing.” I won’t tell you not to be an optimist, but I think you’ll find yourself a bit less optimistic after a few situations like this.

Perhaps you’re neither an optimist nor a pessimist. You’ve seen this show before. You’ve heard about YAGNI and evolutionary architectures. So you decide that you’ll invest in this new thing in proportion to how much value it’s creating. Each time the new thing demonstrates more value, you’ll improve it, continuously. Hey, that’s a neat concept. There should be a name for it.

Start Simple

Right now, all you need to do is get the new thing running on a weekly schedule. You’ve heard that Prefect 2 has expressive, easy-to-use scheduling, so you try it out. You install Prefect and jump into the docs. You find that Prefect has a concept for a specific piece of code - a flow. You read that you can turn any script into a flow by adding just two lines of code (one import statement and one flow decorator) to the primary file, the_new_thing.py. You add them.

Easy enough, but what have you gained? To find out, you run the script again, just as you did before, and you see that the output is different:

    15:27:42.543 | INFO | prefect.engine - Created flow run 'jumpy-puppy' for flow 'the_new_thing'
    Its gonna be big!
    15:27:42.652 | INFO | Flow run 'jumpy-puppy' - Finished in state Completed()
    42

Now, the new thing is a flow. Prefect has a unique name for the specific time that this flow ran - jumpy-puppy. Prefect also knows:

- When the script started running

- When it finished running

- How long it took to run

- Whether it completed successfully

Notice that you didn’t have to stand up a server somewhere to do this. It just worked.

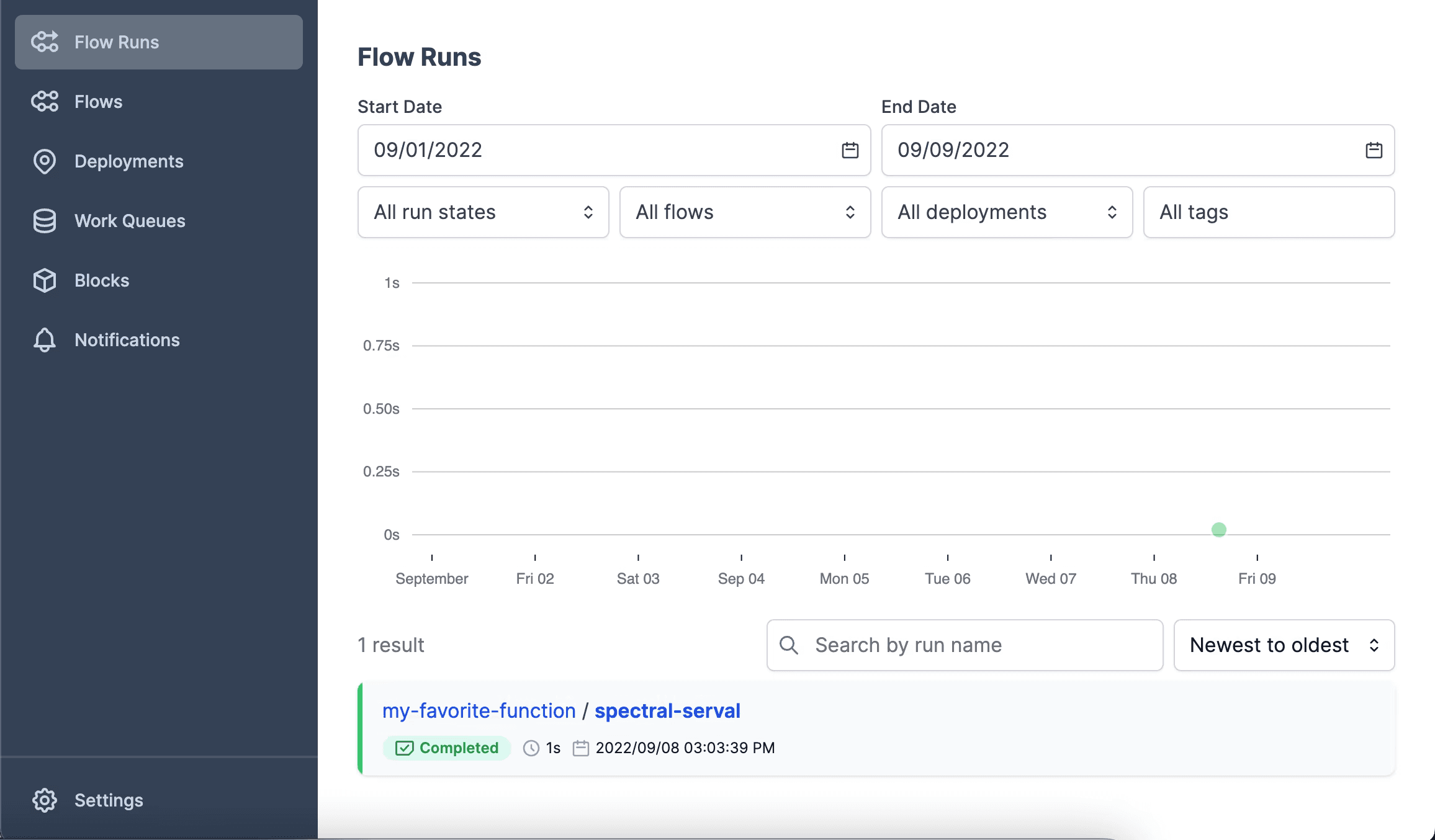

OK, cool, that’s something, but what can you do with this information? Well, imagine that you had a record of this information every time you ran a flow. You’d be able to see if the duration increased, perhaps because a query was taking longer. Of course, you’ll need a way to view the information. With just one more terminal command, prefect orion start, you’ve started an Orion server. Don’t worry, it doesn’t need a big cluster. You can run it on any laptop. You open your favorite browser, go to http://127.0.0.1:4200, and there’s your flow run.

That’s nice and all, but you need to get the new thing running on a weekly basis and get on to the next thing. To do this, you only need to understand one more concept - a deployment. With the Orion server running, you can create a deployment with just a single CLI command and set the schedule from the UI.

Now, you’re off to the races. With a grand total of two lines of code, two CLI commands, and a couple of clicks, you’ve gained basic observability for each run of the new thing. If you leave the Orion server running, the new thing will run on the schedule you specified, with a persistent, informative record of runs, conveniently accessible in a beautiful UI.

Mission accomplished. You set it and forget it. Weeks pass.

Incrementally improve with Prefect

From here, you’re in a choose-your-own-adventure story - except it’s not you choosing, it’s just life. There are many possible paths, but all of them start, as our days often do, with a chat message.

Yup, you can see in the Prefect UI that the most recent run of the new thing flow failed. You run it again and it succeeds. Weird. It’s not worth troubleshooting now, but you figure that you’ll at least set a notification to alert you or your team the next time this flow fails.

Well, at least your notification worked. How convenient that it was right in the same messaging service that you’re already using. You visit the UI again and see that the flow run started and ended at the exact same time as when it last failed. Maybe something is timing out? You think your database connection could be finicky. You return to the Prefect docs and find that any functions in your flow can be further broken down into tasks, and that tasks support retries. You make the function that opens your database connection into a task and specify that it should retry up to three times before failing. During a test run, you see that you can now track runs of that task just as you can track flow runs.

The next time the flow runs, you don’t get a failure notification. That’s good, but you don’t quite trust it, so you go over to the UI and see that the database connection task did in fact fail the first time it ran, but was successful the second time. Good to know. Back to work on other stuff.

You’re no distributed computing expert (or maybe you are, but I’m not), but you read a bit about Prefect Task Runners and learn that Prefect makes it easy to start running on Dask and Ray. With some tweaks to your code and some configuration modifications, the new thing is now running on Dask in less than half the time. Money.

After some back and forth, you realize you can support this request by just parameterizing your existing script. Prefect makes it easy to parameterize flows. You create a second deployment of the same flow that runs on the same schedule, but with different parameters. Now both teams are satisfied.

It sounds like the new thing is gaining traction! You create another deployment, as you did before, but you’re a little worried about having all of these things running at the same time on the same machine. You learn a bit about Prefect’s work queues and find that flow run concurrency limits are trivial to add. You set a limit of 1 and can rest assured that only one flow run will be in progress at a time.

Oh boy, a nastygram. You’ve already set up flow run concurrency limits, but database connections are opened by tasks. You read about Prefect’s task runners and see that you can add task run concurrency limits and manage them with tags. You add a “prod-database” tag to the relevant tasks and run a single command: prefect concurrency-limit create prod-database 1. That’s one less thing for you and your database admin to worry about.

You’re no DevOps expert (or maybe you are, but I’m not), but you’ve heard that Prefect makes flows portable with infrastructure blocks, which give you a straightforward way to specify infrastructure for flow runs created by the deployment at runtime. You head over to the UI, enter the relevant configuration values, and update your existing deployments to specify the new block. Wow, that was easy.

A tool that grows with your code

When a new project starts, there’s no way to know how it will evolve. It makes sense to start simple. To get started with Prefect, you only need to understand one new concept - a flow. To run that flow on a schedule, in a remote location, or with editable parameters, you need just one more concept - a deployment. From there, you can learn other concepts to enable new features independently, only as you need them. You can continuously adapt and improve your flow in response to the changes that will inevitably come. As projects proliferate, each one can use as much or as little of Prefect as it needs.

Prefect makes complex workflows simpler, not harder. To learn more, try Prefect Cloud for free, download our open source package, join our Slack community, or talk to one of our engineers.
