Data is Mail, Not Water

Stop stressing about loops and if statements in data pipelines.

Data folks often think about the things we build as “pipelines.” The pipeline mental model has served us well, but it has also constrained our thinking and forced us to contort our code, and ourselves, into unnatural shapes.

Data is mail, not water

Imagine how water gets to your home. It flows through pipes, of course. The pipes are linear and fixed. The water’s path isn’t determined by any property of the water itself, but rather by a designer ahead of time. The water can only be controlled with valves at decision points that the designer anticipated. Airflow, the most successful first-generation workflow orchestrator, is the ultimate pipeline builder. Its static workflows are useful for modeling linear, fixed processes.

Now imagine how mail gets to your home. It’s probably delivered via a postal worker traveling along a route. The route is often the same, but not always. There may be a road closure along the way, demanding that the postal worker adjust the route. There may be an especially high volume of mail that could demand a different route. Importantly, the postal worker has information available to them along their route that couldn’t possibly be known ahead of time. The absence of a precisely specified, unalterable path makes mail delivery more resilient, not less.

Data-intensive processes are more like mail delivery than water delivery.

Data is dynamic, workflows should be too

Data-intensive processes are inherently non-linear. After all, data is a reflection of the complex, asynchronous world around us. Modern workflow orchestrators accept and embrace this reality. They’re designed for dynamic workflows.

A dynamic workflow is a series of tasks (units of code to be executed) that each run according to dependencies discovered at runtime, while the code is executing. Dynamic workflows don’t require the engineer to know exactly how they will unfold ahead of time. Instead, they allow decisions about the path that the workflow will take to be deferred to runtime, when the workflow has more context.
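As a rough illustration of deferring decisions to runtime, here is a minimal plain-Python sketch with no orchestrator involved. The function names (`inspect_item`, `collect_signature`, `deliver`, `run_workflow`) and the value threshold of 100 are hypothetical, chosen only to extend the mail analogy.

```python
def inspect_item(item: dict) -> dict:
    """First task: examine the item and record what it turns out to need."""
    # Hypothetical rule: valuable packages require a signature.
    needs_signature = item["kind"] == "package" and item["value"] > 100
    return {**item, "needs_signature": needs_signature}


def collect_signature(item: dict) -> str:
    return f"signature collected for {item['kind']}"


def deliver(item: dict) -> str:
    return f"delivered {item['kind']}"


def run_workflow(item: dict) -> list[str]:
    """Choose each next step only after the previous step has returned."""
    steps = ["inspect_item"]
    inspected = inspect_item(item)

    # This branch depends on a result that did not exist before the run began.
    if inspected["needs_signature"]:
        collect_signature(inspected)
        steps.append("collect_signature")

    deliver(inspected)
    steps.append("deliver")
    return steps


letter_path = run_workflow({"kind": "letter", "value": 0})
package_path = run_workflow({"kind": "package", "value": 250})
```

The same code produces two different paths: the letter skips the signature step, while the package picks it up because of what the inspection found.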

A random walk down data street

With Prefect, a modern workflow orchestration platform, workflows are expressed in Python, with its full functionality, including constructs like conditionals and loops. Let’s explore what’s possible with Prefect through a simple Python script:

1from prefect import flow, task

2import random

3import time

4

5@task

6def get_house_count():

7 houses = random.randint(1, 5)

8 return {"houses": houses}

9

10@task(retries=3)

11def visit_house(count):

12 # Is there an obstacle?

13 obstacle = random.randint(1, 20)

14 if obstacle > 19:

15 raise Exception("I can't pass, there's an obstacle in the way.")

16 else:

17 mail = random.randint(0, 4)

18 packages = random.randint(0, 2)

19 delivery = [mail, packages]

20 return delivery

21

22@task

23def deliver_package(packages):

24 time.sleep(2)

25 print("Delivered package.")

26

27@task

28def deliver_mail(mail):

29 time.sleep(1)

30 print("Delivered mail.")

31

32@flow

33def walk_route():

34 print("Starting route...")

35 houses = get_house_count()

36 for h in range(houses["houses"]):

37 print ("Delivering to house #" + str(h) + " of " + str(houses["houses"]))

38 delivery = visit_house(houses)

39 for i in range(delivery[0]):

40 deliver_mail(delivery)

41 for j in range(delivery[1]):

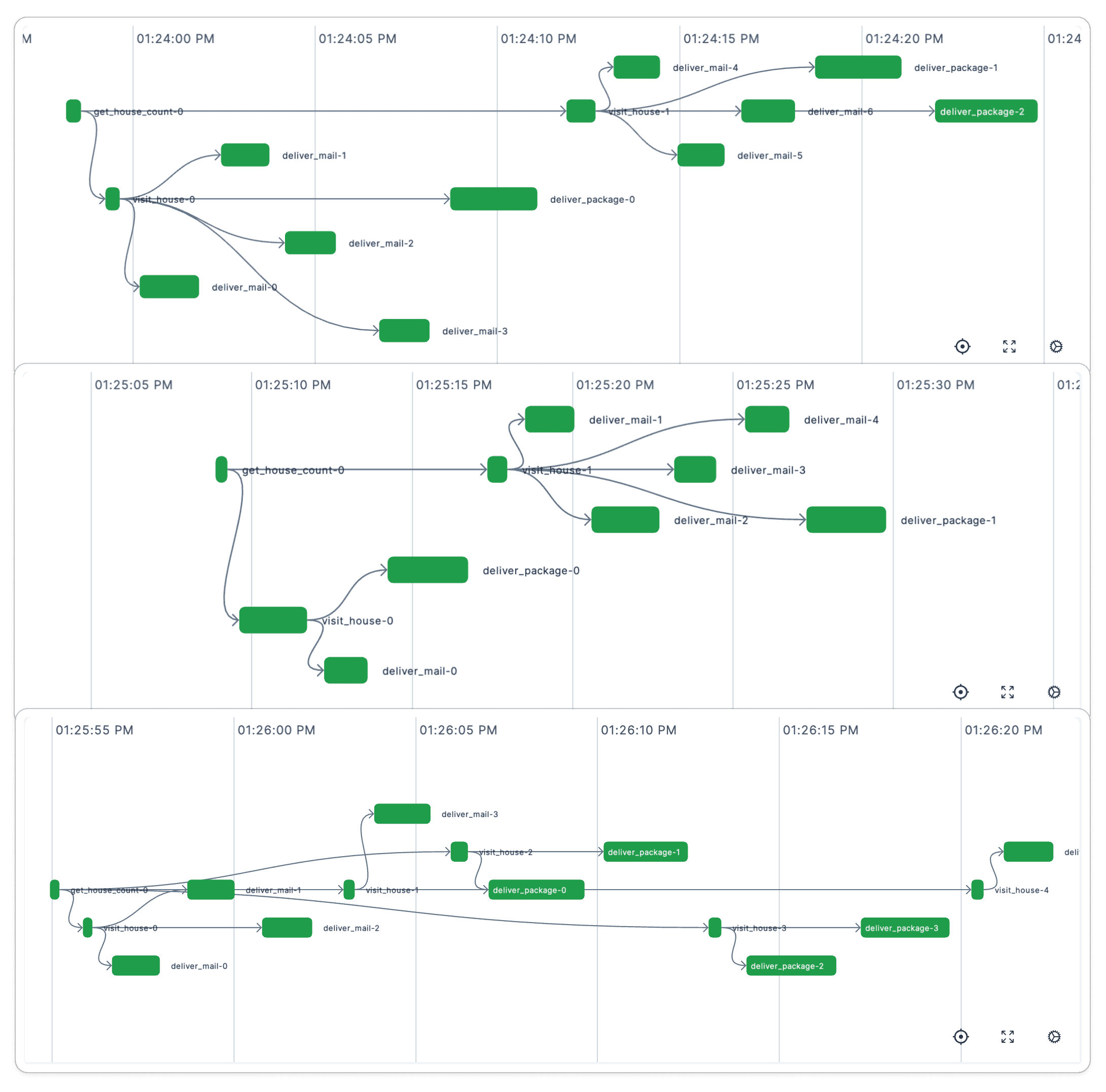

42 deliver_package(delivery)The main function, walk_route, is the flow - the place the workflow logic is specified and starts. It calls three other functions, visit_house, deliver_mail, and deliver_package, which are all tasks in the workflow. Its simple code, but it can be used to model complex behavior. In this case, the number of houses, pieces of mail, and count of packages have all been randomized. While the code itself is static, the path taken through it varies depending on the data it handles. Each time this flow runs, a different workflow unfolds:

These graphs aren’t merely traces of the code execution. At each node in each graph, Prefect determined whether and how the workflow should proceed, in accordance with features like retries, caching, concurrency limits, and more.
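To make the retry feature a little more concrete, the sketch below shows the general idea in plain Python. It is not Prefect's implementation; `run_with_retries` is a hypothetical helper, and it assumes that `retries=3` means one initial attempt plus up to three further attempts. Under that assumption, and treating attempts as independent, it also works out how often visit_house would exhaust its retries given the 1-in-20 obstacle chance in the script.

```python
from fractions import Fraction


def run_with_retries(fn, retries):
    """Call fn; on failure, try again up to `retries` more times, then re-raise.

    Hypothetical helper for illustration only, not how Prefect does it.
    Returns the result together with the number of attempts used.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return fn(), attempts
        except Exception:
            if attempts > retries:
                raise


def make_flaky(failures_before_success):
    """Build a function that fails a fixed number of times, then succeeds."""
    state = {"calls": 0}

    def flaky():
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise Exception("I can't pass, there's an obstacle in the way.")
        return "visited"

    return flaky


# Two obstacles in a row, then a clear path: succeeds on the third attempt.
result, attempts_used = run_with_retries(make_flaky(2), retries=3)

# Chance that all four attempts (1 initial + 3 retries) hit an obstacle,
# assuming each attempt independently fails with probability 1/20.
p_obstacle = Fraction(1, 20)
p_visit_fails = p_obstacle ** (3 + 1)
```

Under those assumptions a single attempt fails 1 time in 20, while a visit with three retries fails only 1 time in 160,000, which is why the retry decision at each node matters so much for the shape of the final graph.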

This is a toy example, but you can imagine the inherent randomness in the workflows you manage. The most common issues (schema changes, web service interruptions, quality check failures, and so on) are all outside your flow’s control. A workflow locked into a preordained path can only fail, cease execution, and await manual intervention. A dynamic workflow can react and adjust its path in real time.
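One way to picture "react and adjust" is a step that routes around bad input instead of aborting the whole run. The following is a minimal plain-Python sketch under invented assumptions: the field rename from `zip` to `postal_code`, and the names `normalize` and `process_batch`, are hypothetical and stand in for whatever schema drift and quality checks your own workflows face.

```python
def normalize(record: dict) -> dict:
    """Map a record onto the current schema, tolerating one known rename."""
    # Hypothetical schema change: upstream renamed "zip" to "postal_code".
    if "postal_code" in record:
        postal_code = record["postal_code"]
    else:
        postal_code = record["zip"]  # raises KeyError if neither field exists
    return {"name": record["name"], "postal_code": str(postal_code)}


def process_batch(records: list[dict]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Load what can be loaded; quarantine the rest instead of failing the run."""
    loaded = []
    quarantined = []
    for record in records:
        try:
            loaded.append(normalize(record))
        except KeyError as exc:
            # The path for this record changes at runtime: it goes to
            # quarantine with a reason, and the batch keeps moving.
            quarantined.append((record, f"missing field {exc}"))
    return loaded, quarantined


batch = [
    {"name": "A", "zip": 12345},            # old schema
    {"name": "B", "postal_code": "67890"},  # new schema
    {"name": "C"},                          # fails the quality check
]
loaded, quarantined = process_batch(batch)
```

Here two records are loaded and one is set aside with a reason attached, so a person can look at the exception later without the whole batch waiting on them.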

Workflow orchestration should elevate code, not constrain it

Legacy orchestrators, and the pipeline mental model embedded in them, have made us think in static terms. Dynamic workflows aren’t so much about embracing a new way of thinking as they are about casting off the shackles of this old way of thinking. Your data isn’t water, it’s mail. Don’t coerce your code into linear pipes. Elevate your code into dynamic workflows that react to their environment and the data they handle when they run.

Prefect makes complex workflows simpler, not harder. Try Prefect Cloud for free, download our open-source package, join our Slack community, or talk to one of our engineers to learn more.
