Anaconda and Prefect

Scaling with Prefect

About Anaconda

Anaconda powers the modern data ecosystem. Our goal is to build data literacy and create a platform where data science users, open-source contributors, AI researchers, and technology providers can discover, share, and sell their innovations.

Packages are one of the ways we deliver on this goal, and they are also one of the key reasons people choose to leverage Anaconda. With thousands of packages available in our repositories via the conda package manager, we help data and ML teams get tooling set up quickly, so they can spend more time solving problems and less time fighting to set up languages and libraries.

The challenge: building packages is tricky

On the surface, 'conda install' seems simple. You run it, wait a few seconds, and the package you asked for is ready to use. However, a lot of work happens behind the scenes to enable the quick package installation that has become an essential part of the Anaconda experience.

The open-source community releases new versions and security fixes every day, and we need to update our packages to these latest versions so that our customers can use them with confidence.

Anaconda builds thousands of open source packages written in many different programming languages (Python, C, Fortran, etc.) using different build systems (cmake, bazel, etc.) and each package needs to be built on each of the seven platforms we support:

- 4 Linux builds (x86_64, aarch64, ppc64le, s390x)

- 2 macOS builds (x86_64 and arm64)

- 1 Windows build (x86_64)
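As a rough illustration of what this means for a single package, the platform list above can be written as a small build matrix. This is only a sketch: the `PLATFORMS` mapping, the `build_matrix` helper, and the job dictionary layout are hypothetical names chosen for this example, not Anaconda's actual tooling.

```python
# Platforms as listed above. The dictionary layout is an assumption
# made for illustration, not Anaconda's real configuration format.
PLATFORMS = {
    "linux": ["x86_64", "aarch64", "ppc64le", "s390x"],
    "macos": ["x86_64", "arm64"],
    "windows": ["x86_64"],
}


def build_matrix(package, platforms=PLATFORMS):
    """Return one build job per (operating system, architecture) pair."""
    return [
        {"package": package, "os": os_name, "arch": arch}
        for os_name, archs in platforms.items()
        for arch in archs
    ]


if __name__ == "__main__":
    # "example-package" is a placeholder name.
    for job in build_matrix("example-package"):
        print(f"{job['package']}: {job['os']}-{job['arch']}")
```

Every package update therefore fans out into seven separate builds, each of which has to succeed before the update can ship.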

One of Anaconda’s goals is to be able to bootstrap new platforms easily. Most CI/CD tooling relies on agents that would require significant work to get running on a new platform. Luckily, Prefect is implemented in pure Python, which means that as soon as we can run any of our software on a new platform, we can also run automated builds there.

Beyond that, there is a great diversity of tasks that builds must complete successfully.

- Builds of the same package on multiple different platforms.

- Managing a set of heterogeneous (i.e. cloud + on-prem) build workers.

- GPU packages need to be tested on machines that are set up differently, and those machines need to be provisioned dynamically because they are expensive.

- Large groups of packages need to be built and tested together.

Unlike traditional CI/CD tools, Prefect just runs Python code and keeps track of the inputs and outputs. This allows us to build arbitrarily complex pipelines that are hard to configure with other tools.

Other Prefect features which are proving very useful:

- Prefect is open source and we can modify the agent to suit our needs if necessary.

- Prefect has a rich API which can be used to query for build information.

The solution: creating a scalable package build pipeline

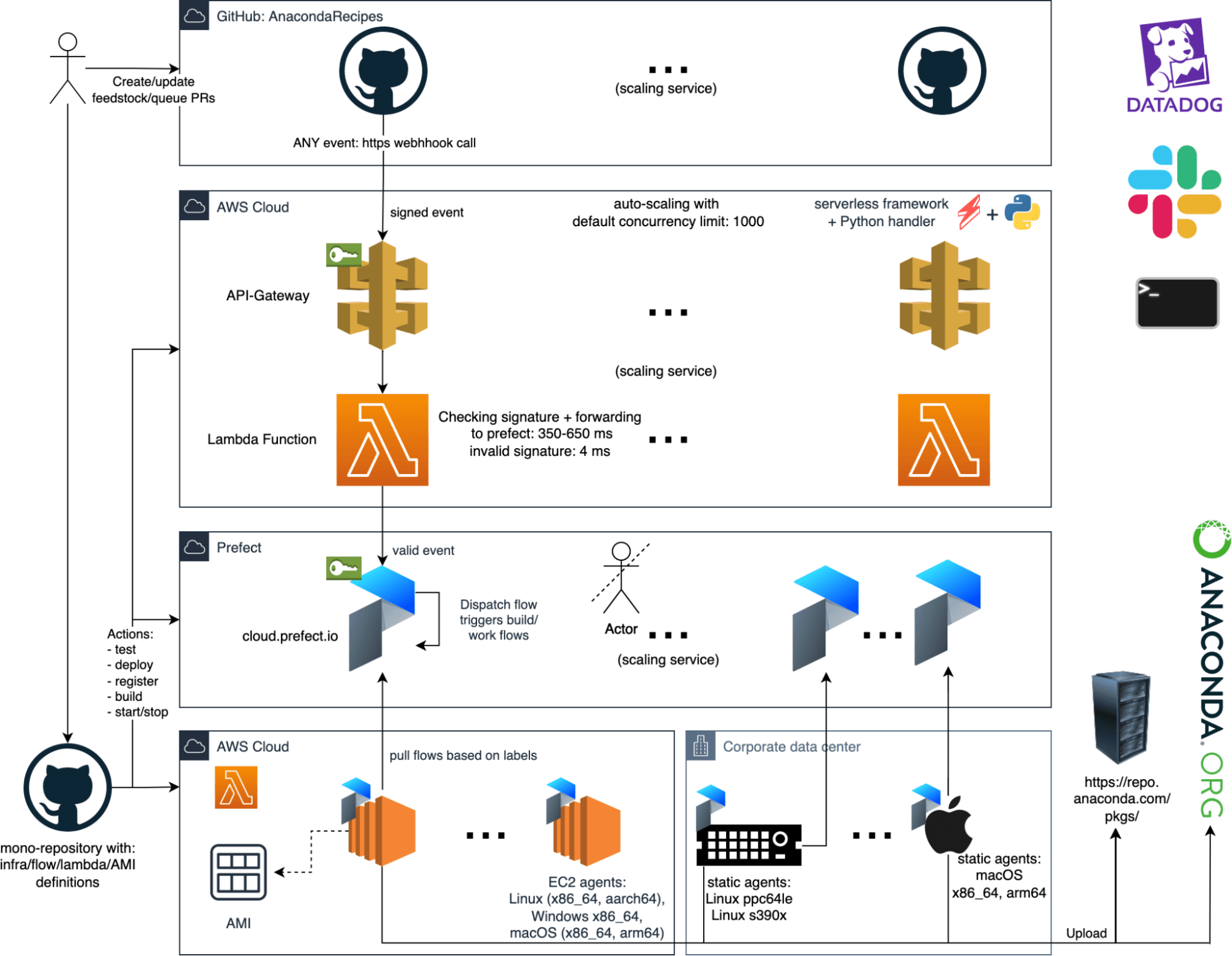

To meet the unique challenges of our build requirements, we built an event-driven build pipeline powered by a combination of excellent tools.

At a high level, our build pipeline consists of the following steps:

- A GitHub pull request to one of our recipe repositories triggers a webhook call to AWS to start a build.

- Our AWS API Gateway runs a Lambda function to validate the event and ensure we want to start the build process.

- If the event is valid, the Lambda function creates a flow run in Prefect Cloud. To help automate the build process, we built a CLI tool that interacts with the Prefect API and creates new flows as needed.

- The main Prefect flow interprets the data provided by the event and starts build subflows for each of the required platform/architecture combinations in the build matrix.

- Prefect agents running on ephemeral EC2 instances (using AMIs) and static machines (ppc64le, s390x) serve as build agents that begin running the build subflows as soon as they get triggered.

- Each build runs either in ephemeral containers (all the Linux builds) or on bare metal (macOS and Windows).

- As each build flow runs, it provides status updates, collects live logs, and ultimately provides a red/green status back to the GitHub pull request it builds, indicating whether each build flow was successful.

- A Lambda-based bot queries the Prefect Cloud Queue for stuck builds and pushes updates to Slack for the team to look at.

- When builds are complete, Prefect Cloud provides a searchable interface that makes it easy to find and view the status of current and past build flows.
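The validation step near the start of this list can be sketched in a few lines of Python. GitHub signs webhook payloads with HMAC-SHA256 and sends the result in the `X-Hub-Signature-256` header. Everything else here is an assumption made for illustration: the function names, the set of pull request actions that trigger a build, and the idea that these are the only checks performed. It is not the actual Lambda function.

```python
import hashlib
import hmac
import json

# Assumption: only these pull request actions should start a build.
BUILD_ACTIONS = {"opened", "synchronize", "reopened"}


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check the X-Hub-Signature-256 header value against the raw request body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature_header)


def should_start_build(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Return True only for authentic pull request events we want to build."""
    if not verify_signature(secret, body, signature_header):
        return False
    payload = json.loads(body)
    return "pull_request" in payload and payload.get("action") in BUILD_ACTIONS


if __name__ == "__main__":
    # Placeholder secret and payload for a local dry run.
    secret = b"example-secret"
    body = json.dumps({"action": "opened", "pull_request": {"number": 1}}).encode()
    signature = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    print(should_start_build(secret, body, signature))
```

Only when a check like this passes would the function go on to create a flow run in Prefect Cloud. Rejecting unwanted events this early keeps them from ever reaching the build workers.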

The result is a build pipeline that meets our needs better than any off-the-shelf tool we tried. So while CI/CD tools are the right choice for the majority of software teams, it’s important to assess your needs and decide if it’s worth the development and maintenance effort to architect your own build pipeline.

Given the scale at which Anaconda needs to build and deploy packages and the number of users who rely on us, we saw significant business value in a build pipeline tailored to our needs.

Summary

Building packages at scale is complex. Even with good tools to run every individual build, coordinating and reporting on the build process at scale takes a lot of work.

Our event-driven cloud build pipeline leverages the strengths of GitHub, AWS, and Prefect to automate much of the workload. GitHub provides a reliable platform to store recipes and trigger build events. AWS provides the infrastructure to build packages at scale quickly. And Prefect adds observability and reliability to the pipeline by ensuring that all builds launch, run, and report their results.

Together, these tools give us a pipeline that provides great insight into our build process and ensures that our ever-growing user base can get the up-to-date packages they need, when they need them.
