Deploy your models to prod faster

Elevate your Python code to bulletproof workflows with Prefect.

```python
import mlflow
from prefect import flow

# read_data, add_features, and train_best_model are project helpers
# assumed to be defined elsewhere in this module.


@flow
def main_flow(
    train_path: str = "./data/green_tripdata_2021-01.parquet",
    val_path: str = "./data/green_tripdata_2021-02.parquet",
) -> None:
    """The main training pipeline"""

    # MLflow settings
    mlflow.set_tracking_uri("sqlite:///mlflow.db")
    mlflow.set_experiment("nyc-taxi-experiment")

    # Load
    df_train = read_data(train_path)
    df_val = read_data(val_path)

    # Transform
    X_train, X_val, y_train, y_val, dv = add_features(df_train, df_val)

    # Train
    train_best_model(X_train, X_val, y_train, y_val, dv)
```

Testimonial

“I used the parallelized hyperparameter tuning with Prefect and Dask (incredible) to run about 350 experiments in 30 minutes, which normally would have taken 2 days”

Andrew Waterman

Data Scientist, Actium

Deploy your models to prod faster

Don't change your code for your orchestrator: Prefect is Pythonic and doesn't require boilerplate.

- Scheduling & orchestration: from model training to pipelines

- Flexible infra options, including up to 250 free hours of Prefect-managed compute

- Turn your models into hosted APIs, making them accessible to anyone programmatically

get_stars.py

```python
import httpx
from prefect import flow


@flow(log_prints=True)
def get_repo_info(repo_name: str = "PrefectHQ/prefect"):
    url = f"https://api.github.com/repos/{repo_name}"
    response = httpx.get(url)
    response.raise_for_status()
    repo = response.json()
    print(f"{repo_name} repository statistics 🤓:")
    print(f"Stars 🌠 : {repo['stargazers_count']}")
    print(f"Forks 🍴 : {repo['forks_count']}")


if __name__ == "__main__":
    get_repo_info.serve(name="my-first-deployment")
```

Focus on your code, not the infra

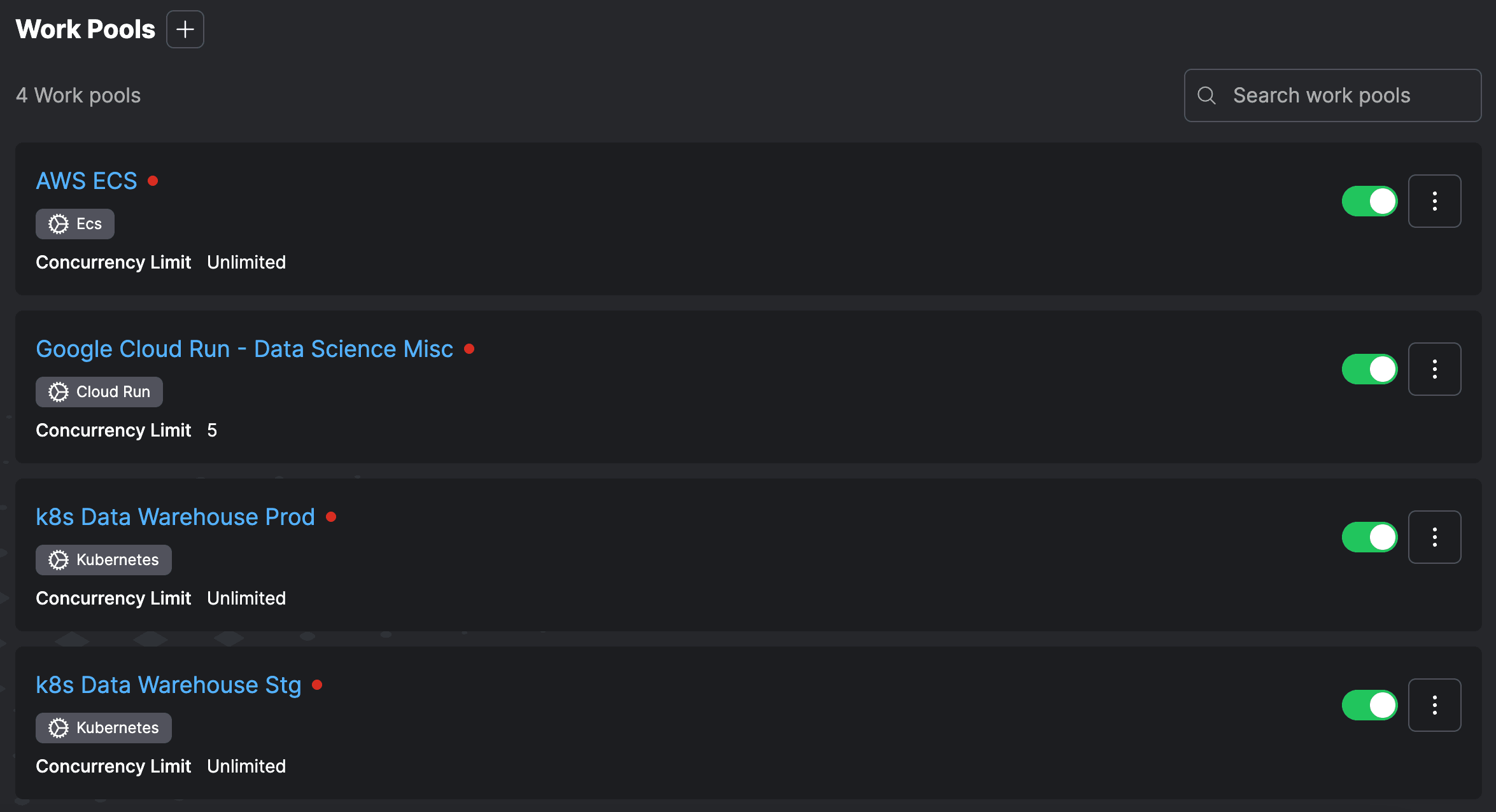

Prefect’s hybrid execution model means your code just works, locally or in production.

- Spin up a Prefect server for local development

- Simplified infrastructure concepts mean you can get into production fast, and scale up without contorting your code

- Move from local to remote or between cloud services with ease

Make your work accessible

Add @flow to your code to turn it into a callable, hosted API. Let anyone interact with your models, track their usage, and understand failures instantly.

- Easily schedule your workflows, or build event-driven pipelines

- Automatically generated UI for running flows and taking inputs

- Hosted API for programmatically interacting with your deployments

- Native interactive workflows for taking input at runtime

```json
{
  "state": {
    "type": "SCHEDULED",
    "name": "string",
    "message": "Run started",
    "data": null,
    "state_details": {},
    "timestamp": "2019-08-24T14:15:22Z",
    "id": "497f6eca-6276-4993-bfeb-53cbbbba6f08"
  },
  "name": "my-flow-run",
  "flow_id": "0746f03b-16cc-49fb-9833-df3713d407d2",
  "flow_version": "1.0",
  "parameters": {},
  "context": {},
  "parent_task_run_id": "8724d26e-d0dd-4802-b7e1-96df48d34683",
  "infrastructure_document_id": "ce9a08a7-d77b-4b3f-826a-53820cfe01fa",
  "empirical_policy": {},
  "tags": [],
  "idempotency_key": "string",
  "deployment_id": "6ef0ac85-9892-4664-a2a5-58bf2af5a8a6"
}
```
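To make the "hosted API" idea concrete, here is a minimal sketch of how a client might ask Prefect to run a deployment over HTTP, using only the Python standard library. It rests on assumptions not stated on this page: the base URL `http://127.0.0.1:4200/api` (taken to be a local Prefect server's default address) and the `/deployments/{id}/create_flow_run` path should both be checked against the REST API reference for your Prefect version. The helper name `build_create_flow_run_request` is hypothetical. The function only builds the request and does not send it.

```python
import json
import urllib.request

# Assumption: a local Prefect server listening on its default address.
API_URL = "http://127.0.0.1:4200/api"


def build_create_flow_run_request(
    deployment_id: str,
    parameters: dict,
    api_url: str = API_URL,
) -> urllib.request.Request:
    """Build (but do not send) a POST request that asks Prefect to run a deployment."""
    # Only flow parameters are sent here; other fields are left to server defaults.
    body = json.dumps({"parameters": parameters}).encode("utf-8")
    return urllib.request.Request(
        # Assumption: endpoint path as described in the Prefect REST API reference.
        url=f"{api_url}/deployments/{deployment_id}/create_flow_run",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_create_flow_run_request(
        deployment_id="6ef0ac85-9892-4664-a2a5-58bf2af5a8a6",
        parameters={"repo_name": "PrefectHQ/prefect"},
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Passing the resulting object to `urllib.request.urlopen` would send it to a running server. A hosted workspace would typically also need an authorization header, which this sketch leaves out.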


Any use case

A versatile, dynamic framework

From model serving to complex operational workflows: if Python can write it, Prefect can orchestrate it.

- Events & webhooks

- Automations

- DAG discovery at runtime

dynamic.py

1from prefect import flow, task

2

3@task

4def add_one(x: int):

5 return x + 1

6

7@flow

8def main():

9 for x in [1, 2, 3]:

10 first = add_one(x)

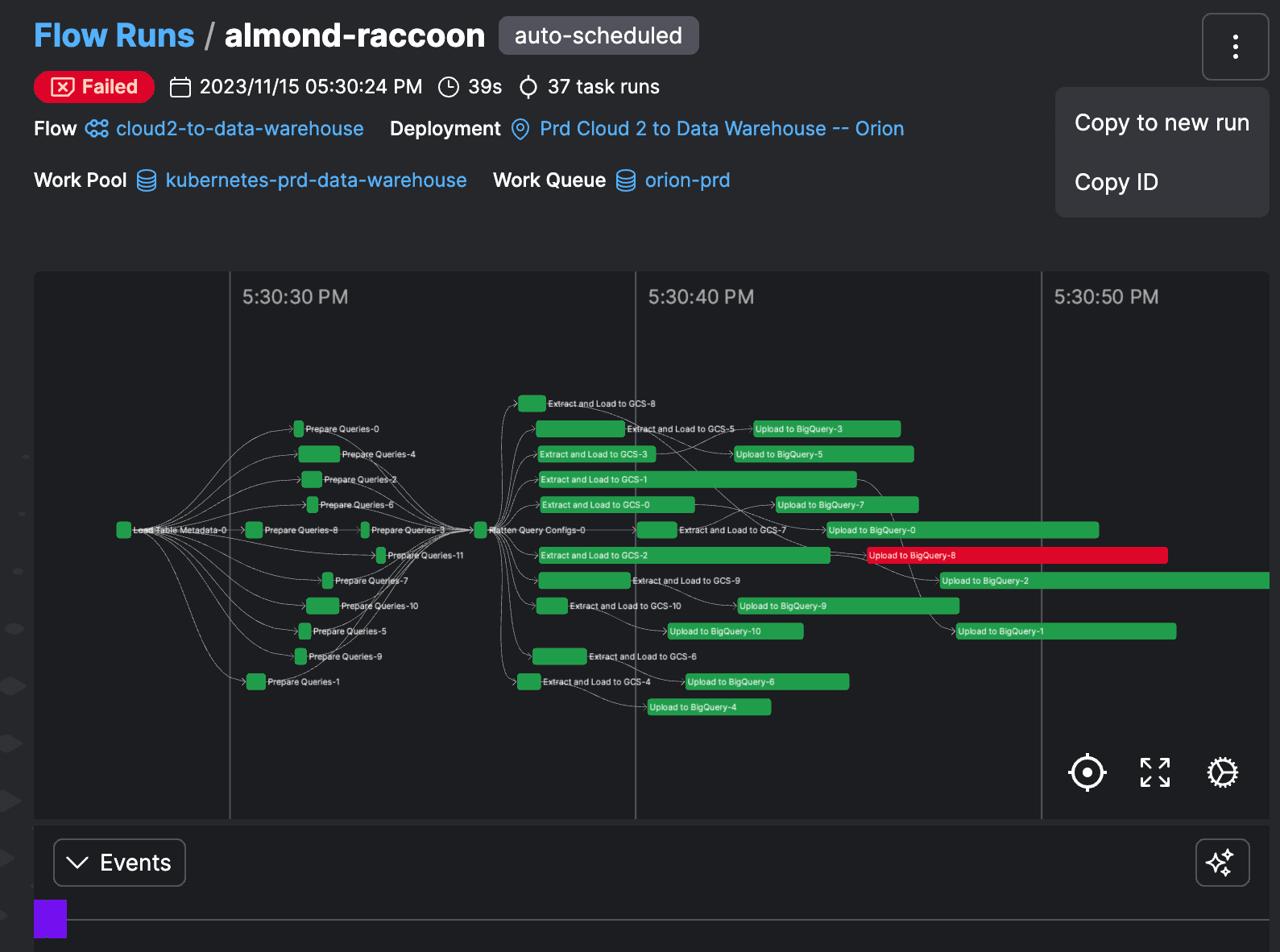

11 second = add_one(first)Always know the status of your work

Proactive insights let you monitor the state of your work, in totality or at the individual level. Granular controls let you keep stakeholders informed with notifications and their own UI to explore.